On August 25th, Loop54 hosted another great hands-on webinar. This time, we talked about A/B-testing. It is our pleasure to provide you with a short recap of this fruitful discussion.

We also want to express the warmest thanks to our webinar speakers

- Sophia Båge, e-commerce Growth Strategist at Creative Unit

- Lukas Rogvall, Senior Sales Executive at Symplify

- Adam Hjort, VP Customer Success at Loop54

for making this webinar so lively and informative.

Let’s start.

Why A/B-Testing Is Important and Beneficial

Over our many years of experience in A/B-testing and conversion optimization, we have often observed the same concerns among our customers:

- Why do A/B-testing at all?

- How do you select the right approach for evaluating the final results?

The goal of A/B-testing is twofold. First, you try to understand customers’ pains and gains and what they are looking for. Then, based on these observations, you create optimized content, such as great-looking product descriptions and images, that helps customers find the right products and add them to the cart.

Besides, why not use the advantages of digital business? With a physical shop, you never get a chance to track and analyze all movements of your customers. With an e-commerce website, you can do this.

On a lighter note, even web designers get a stronger argument for a pay raise since, eventually, they can precisely evaluate the outcome of their efforts. Joking aside, A/B-testing does have a positive impact. In our experience, an increase in conversions of only a few percent may nonetheless mean up to a 300% increase in ROI.

Examples of A/B-Testing

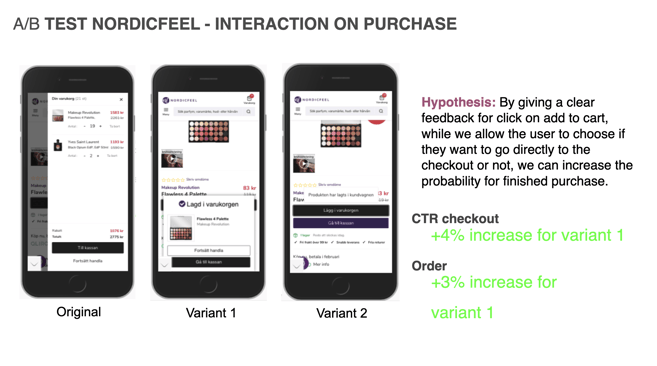

Our speakers shared with us a few fascinating examples of successful A/B-testing from their customers.

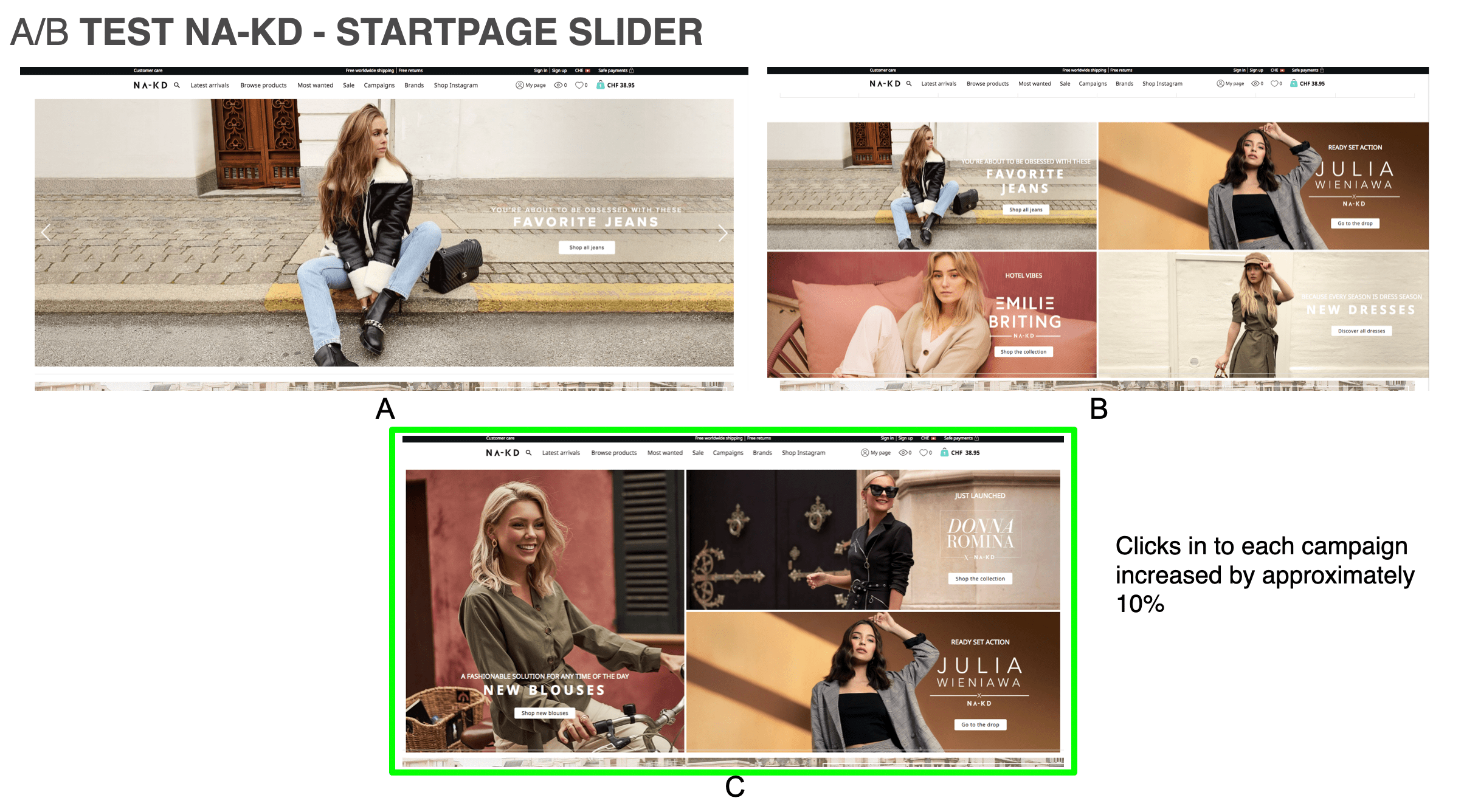

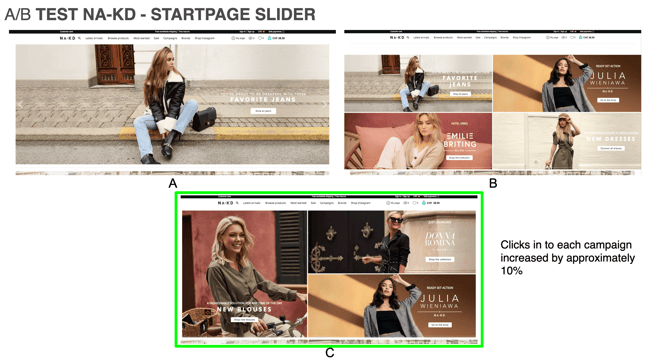

NA-KD is a rising star in online shopping. For most online stores, the home page is less important and does not generate much traffic. NA-KD, by contrast, has a lot of returning customers. For this reason, the start page is critical for conversions, and the A/B-testing was tailored to improve it.

NA-KD uses a strategy of bringing out collections that feature a hero: a self-confident and attractive young woman. Three versions of the start page were drafted, with the photographs of the heroes varying mainly in the type of banner: static vs. carousel. The intention behind these choices was to test which type of visual solution would help customers reach a campaign quickly.

The test took only 1 hour to set up and brought about 10% more clicks for each of the campaigns.

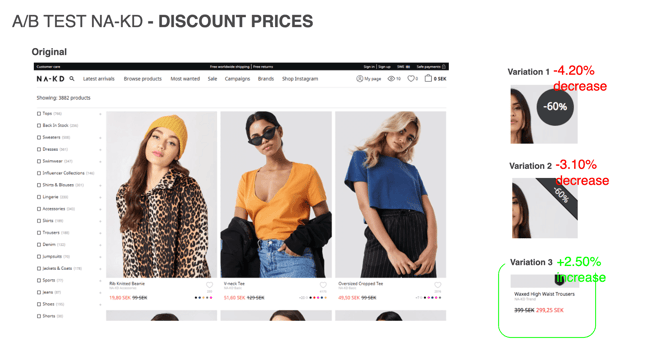

In other tests, NA-KD and Lego compared different ways of displaying the discount price: percentage vs. absolute price vs. a combination of both. The hypothesis was that customers prefer to see the final result instead of having to calculate it themselves. The idea was confirmed: a 2.5% uplift in conversions was observed for the solution with the original and final prices shown next to each other.
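
An uplift of this size only counts if it is larger than random noise. Below is a minimal sketch of one common check, a two-proportion z-test. The visitor and conversion counts are made up for illustration and are not figures from NA-KD or Lego; the sketch also assumes the uplift is read as a relative change in conversion rate.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 4.00% vs. 4.10% conversion, i.e. a 2.5% relative uplift.
z, p = two_proportion_z_test(conv_a=4000, n_a=100_000, conv_b=4100, n_b=100_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts the p-value stays well above 0.05, which illustrates why small uplifts need a lot of traffic before they can be trusted.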

Starting With A/B-Testing in Your Company

Different companies have different challenges. However, what a lot of online businesses have in common is that they need support not only with purely technical issues but also with introducing A/B-testing in the company. It is not that easy to prioritize A/B-testing in the team and make it stand out among other routine tasks.

Apart from this, small businesses mostly suffer from having too little manpower and too little traffic. They should therefore start slowly with the tests. Two tests per month may suffice for the initial phase. As soon as you have more data or you are ready to deploy some radical changes, you can increase the number of tests.

In general, tests need time to generate insightful data, such as traffic and conversions. It makes little sense to test variations for a website that you are going to revamp completely quite soon or for a campaign that runs only for one month.

Big companies may have enough resources for continuous testing. But they are not immune to pitfalls. We will go through them in the next section.

Pitfalls and How to Avoid Them

The Journey Begins – recommendations where to begin

A/B-testing is a sensitive instrument: you can quickly start overdoing things! In the past, a big company wanted to start with A/B-testing. Instead of working out a testing strategy together with the agency, the analytics department of the company spent three or four months proposing hypotheses.

This created a huge time lag for the results of the actual testing.

We recommend starting with small tests and minor changes, setting up the testing process, gaining velocity and first data, and only then going for deeper insights. 65% of your hypotheses will prove wrong, but this makes your approach more precise and fruitful in the future.

Thus, A/B-testing must be a long-term measure. The minimum duration of one test depends on the amount of traffic the website can generate, but no test should run for less than four weeks. This covers monthly variations. Longer tests are highly recommended for more impactful solutions, such as changing the checkout procedure.
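
How traffic translates into test duration can be sketched roughly as follows. This is a minimal illustration that assumes the standard normal-approximation sample-size formula for comparing two conversion rates; the function names, the default significance level and power, and the example traffic figures are our own assumptions, not numbers from the webinar.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_uplift, alpha=0.05, power=0.8):
    """Visitors needed per variant to detect a relative uplift in conversion
    rate (two-sided test, normal approximation for two proportions)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def duration_weeks(weekly_visitors, n_variants, per_variant, min_weeks=4):
    """Weeks needed to fill all variants, never shorter than min_weeks."""
    needed = math.ceil(n_variants * per_variant / weekly_visitors)
    return max(min_weeks, needed)


# Hypothetical shop: 3% baseline conversion, hoping to detect a 10% relative uplift.
n = sample_size_per_variant(baseline_rate=0.03, relative_uplift=0.10)
print(n, "visitors per variant")
print(duration_weeks(weekly_visitors=20_000, n_variants=2, per_variant=n), "weeks")
```

The `min_weeks` floor reflects the four-week minimum mentioned above: even a high-traffic site that fills its sample in days would still run the test for a full month.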

Not Enough Data?

The lack of data, expressed as traffic or conversions, is a big issue, though. When you have too few completed purchases, try looking at CTRs instead, or at how many times your products were put into the shopping cart. If your website has too few visitors, go for qualitative methods, such as interviews and discussions.

Another method to get more data is to test larger changes. Remember that any test data is better than no tests and no data.

However, in trying to get more out of it, be careful not to over-aggregate your data. What works for one regional market, device, or browser may not work for another. And always test how different variations perform on different devices to avoid false conclusions.
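
A small sketch of what over-aggregation can hide. The rows below are made-up numbers chosen for illustration, and the function name and row layout are our own assumptions: variant B wins overall but loses on mobile.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """rows: iterable of (segment, variant, visitors, conversions).
    Returns {segment: {variant: conversion_rate}}."""
    totals = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, visitors, conversions in rows:
        totals[segment][variant][0] += visitors
        totals[segment][variant][1] += conversions
    return {
        segment: {v: c / n for v, (n, c) in variants.items() if n}
        for segment, variants in totals.items()
    }


# Hypothetical test results, split by device.
rows = [
    ("desktop", "A", 1000, 50),
    ("desktop", "B", 1000, 60),
    ("mobile", "A", 1000, 30),
    ("mobile", "B", 1000, 24),
]

by_device = conversion_by_segment(rows)
# Collapse every row into a single "all" segment to get the aggregated view.
overall = conversion_by_segment(("all", v, n, c) for _, v, n, c in rows)
print(by_device)
print(overall)
```

Looking only at the aggregated rates, you would roll out variant B everywhere, although in this made-up data it performs worse for mobile visitors.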

Slowing Down But Keeping the Trust

A particularly nasty pitfall is that your success rate will decrease after some time. Your hypotheses will be neither confirmed nor rejected. The reason is that, in the beginning, you pick the low-hanging fruit, changing and testing quite obvious things in the website design. But sooner or later, the pool of impactful ideas will be exhausted.

At this stage, you will be confronted with the old issue: the trust of your stakeholders in A/B-testing. This one is worth a separate section.

A Need for a Data-Driven Mindset

A proper mindset is very important. Even before A/B-testing stops bringing breathtaking results, when its findings first become rather moderate, it is essential to remain transparent and keep presenting insights from your work.

But why is trust a problem at all?

Data-Driven, Growing, Risk-Taking, Unbiased: Your Ideal Stakeholder

According to our observations, although companies tend to say that they are data-driven, more often than not, their routine practices prove the opposite. We have a possible explanation for this. Companies are used to designing new features and then launching them directly; it is difficult to make them introduce a new step in between.

Some businesses still need a growth mindset: a readiness to invest money into something with an uncertain outcome. Instead, they are naturally afraid of being wrong and stick to old-school attitudes toward marketing budgets.

But what should you do if your stakeholders refuse to believe numbers showing a positive impact of A/B-testing? You need to understand that a lot of people have a confirmation bias. They cannot accept what they see if it does not align with their previous beliefs. A lot of executives and operational managers are used to a waterfall model, but A/B-testing is dynamic and interactive. It requires a certain degree of digital maturity to embrace it.

How to Grow Trust and Launch Impactful A/B-Testing

We recommend a few tricks to overcome the lack of trust.

During the Initial Phase – work with three scenarios

The number one best practice is expectations management. Before starting any tests, it is better to have three scenarios: the worst, the best, and the status quo. By following this practice, you can avoid being deprioritized when resources are allocated if your tests do not achieve the best results immediately.

We also recommend starting with user observations to identify obstacles that visitors confront when they use the website. Then, you can move to creating a hypothesis and then a prototype.

You can test the prototype even before the actual A/B-testing. For instance, you can use your marketing channels instead of changing visuals directly on your website. Email marketing and Facebook are great for testing new picture styles. It is important to break bigger chunks into smaller pieces.

When Reporting Your Results

First, you can support your numbers with recordings of user interviews. Second, you should go for the low-hanging fruit when you are just starting out. This will generate a more visible impact.

You should never allow your perfectionism to hold you back, but you should be careful in communicating this impact. Explain to your stakeholders that the primary aim of the testing is to get to know users better. Talking about uplifts and increases creates too much pressure on everyone and should be avoided.

Conclusion

The more tests you run, the more precise and successful you become in your hypotheses and results.

We hope we have shed some light on the controversial issue of A/B-testing. The webinar contained even more interesting insights, but we do not want to exhaust our readers. By the way, our webinar listeners could ask questions and even take part in a short real-time poll! If you want to experience our interactive discussions, look into our webinar calendar. See you at the next webinar at Loop54!